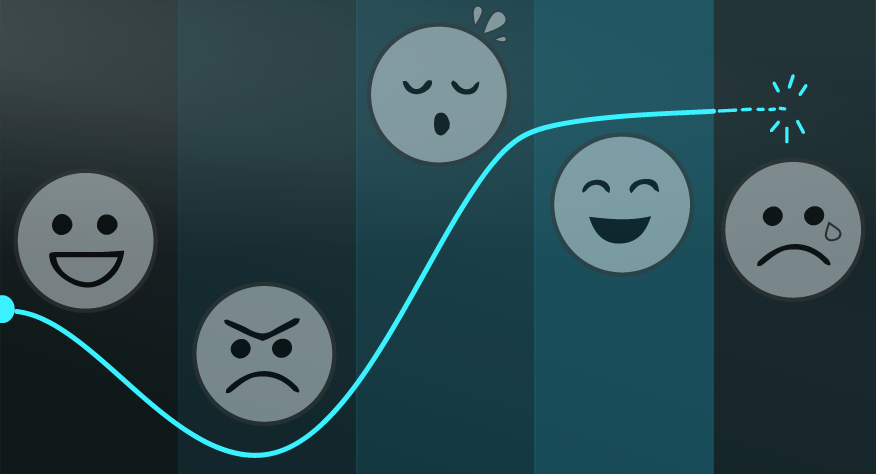

It seems like a good idea, but how do you really know if it works, creates impact, or delivers value?

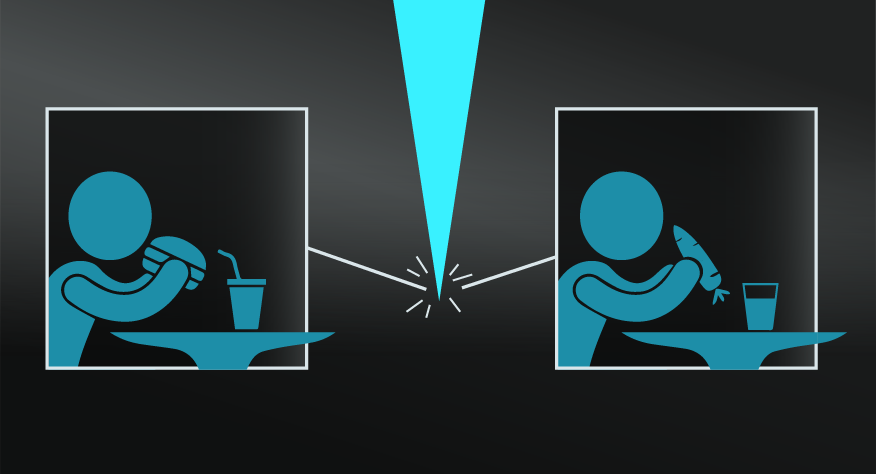

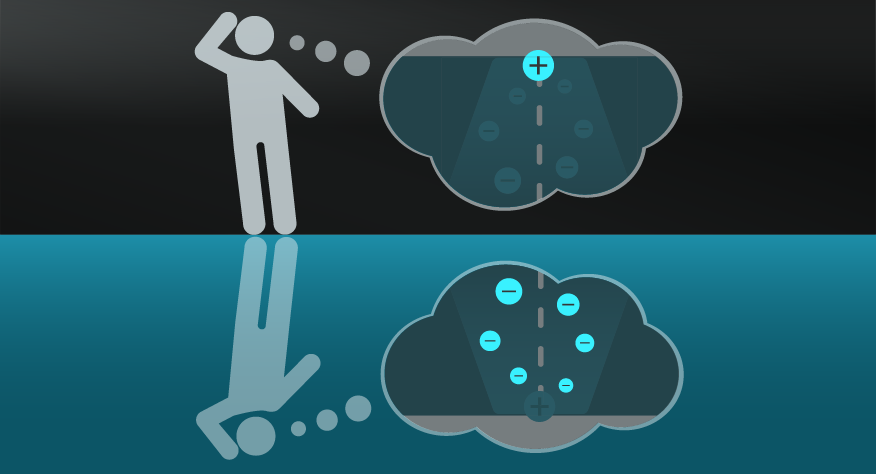

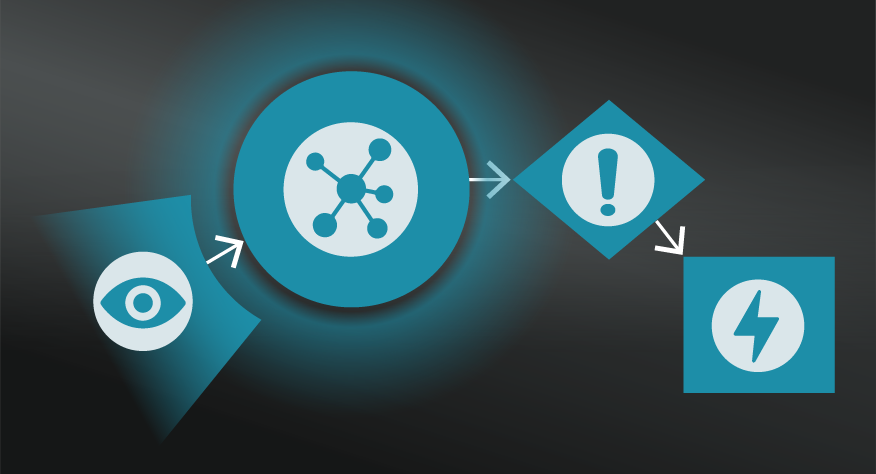

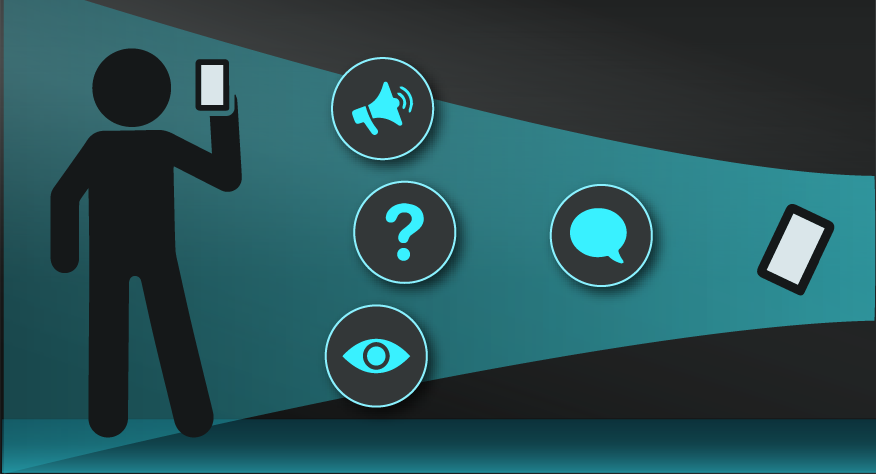

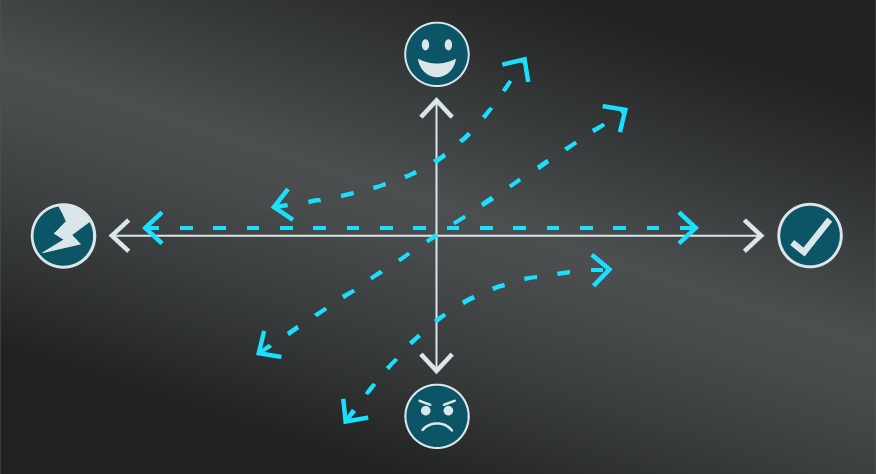

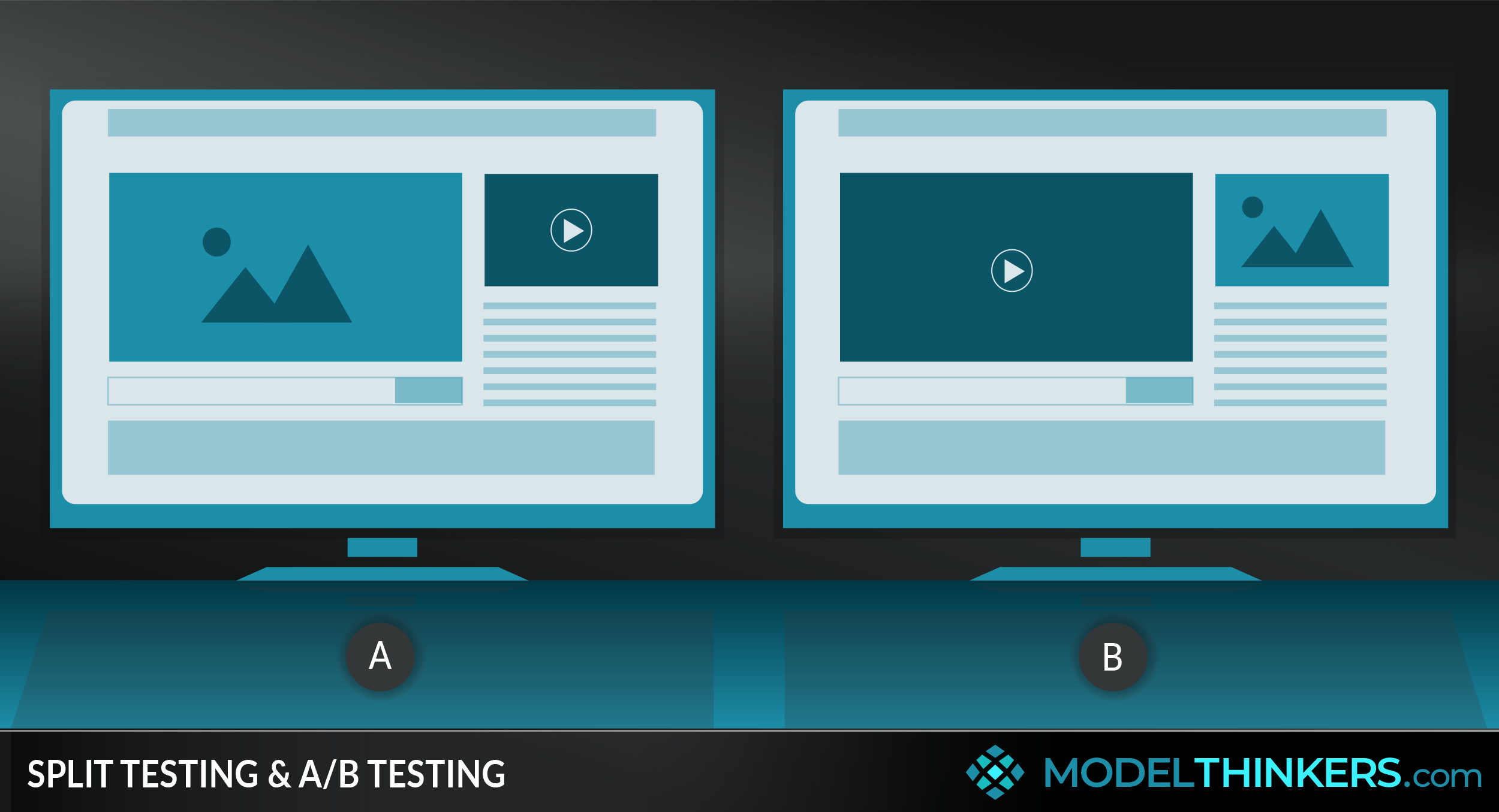

Split Testing and A/B Testing are methods to predict and understand audience reactions to alternative approaches or solutions.

THE DIFFERENCE.

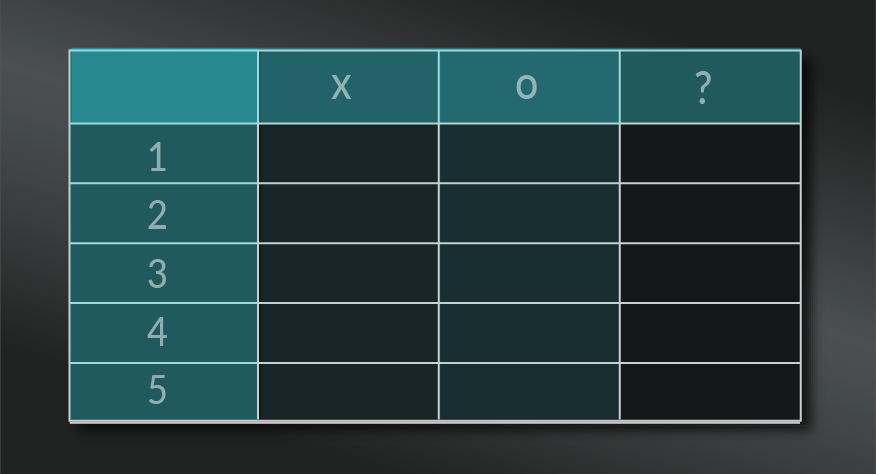

While the terms share a similar intention and are generally used interchangeably, there are differences.

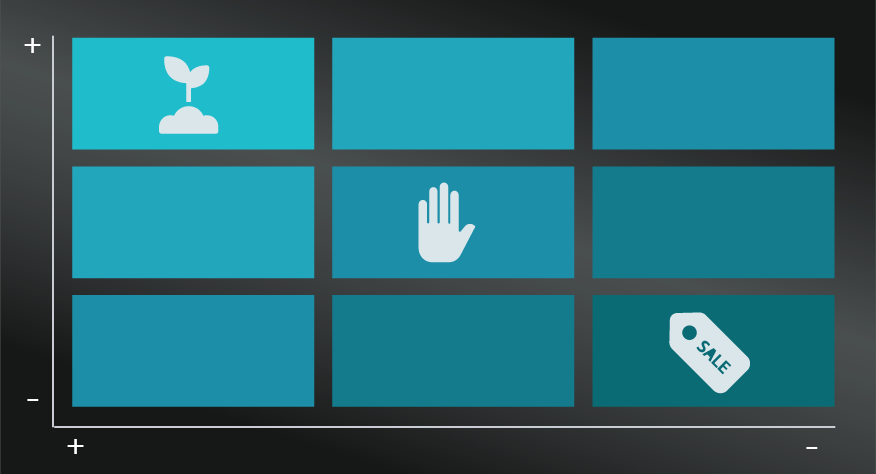

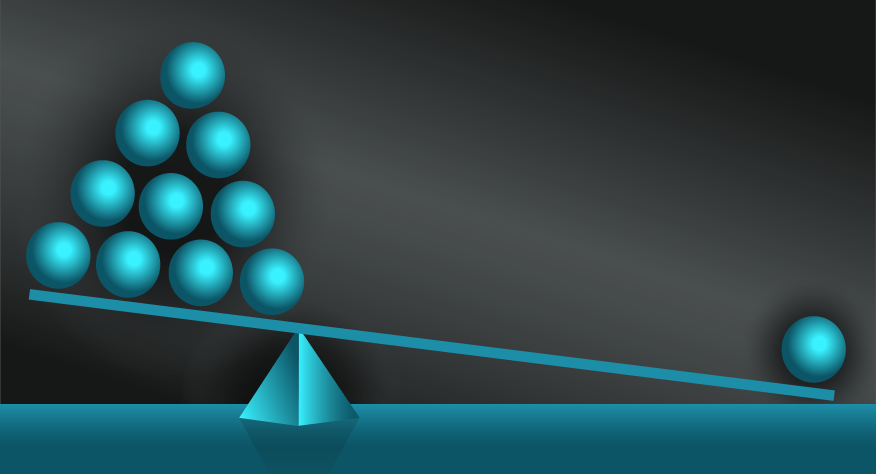

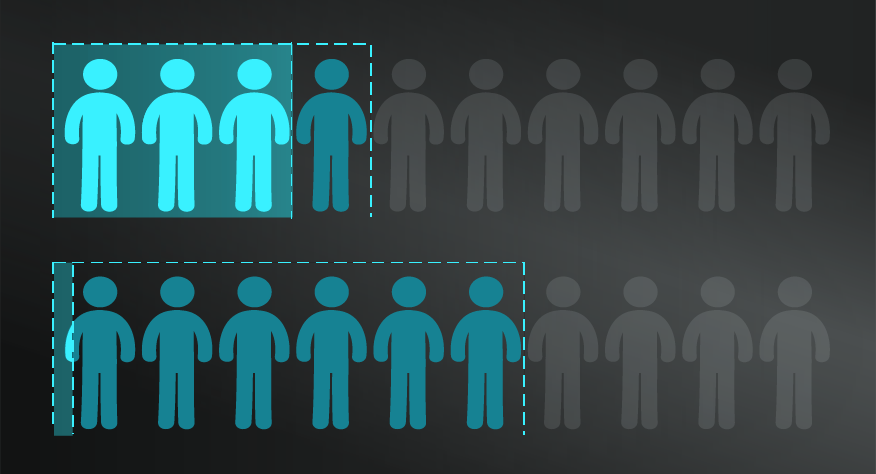

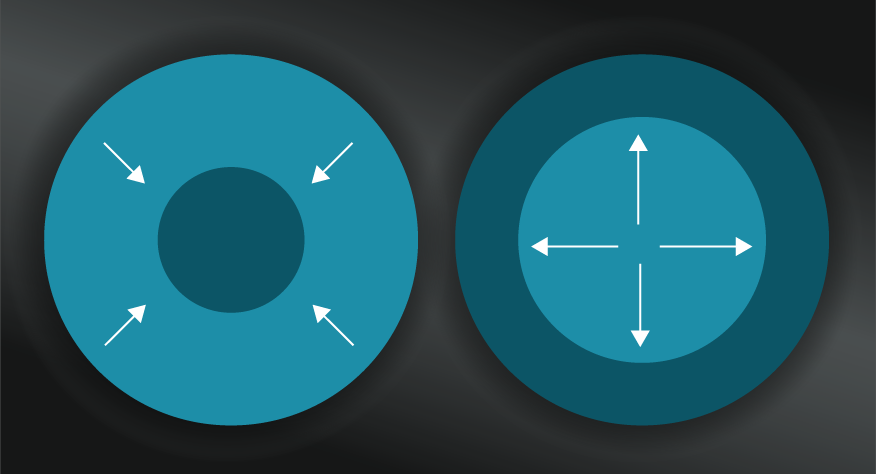

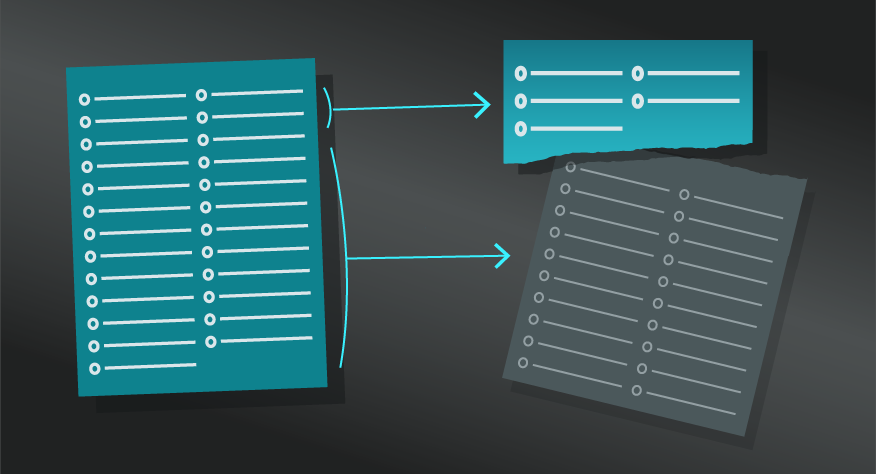

- Split testing splits an audience, often providing two distinct experiences with the aim of gathering feedback on which approach delivers desired behaviours and engagement.

- A/B testing uses a similar approach but tends to compare a single variable rather than the entire design approach.

APPLICATION.

While we've focused on customers and a business context, both models are partly inspired by the Scientific Method and are used in data science across many domains, including medicine, marketing, product development, behavioural economics, business and design. They are used to test hypotheses and provide data on effective practices.

In some tests, one of the comparison groups is a ‘control group’ that receives no intervention and serves as the benchmark against which the other interventions are compared.

IN YOUR LATTICEWORK.

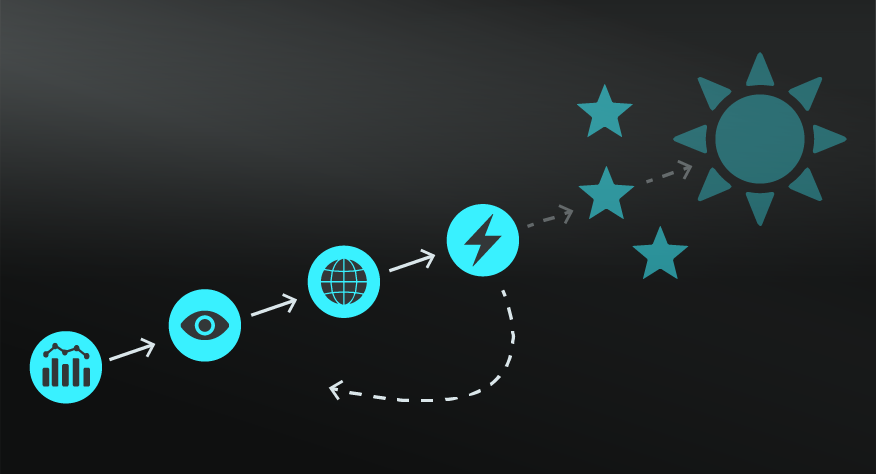

This model represents a powerful way to test and understand the impacts of various initiatives and your reality more broadly. In a business context, it works well with Minimum Viable Products and the Lean Startup.

Use it to challenge the Confirmation Heuristic and other biases and gain the data you need to work through complexity.

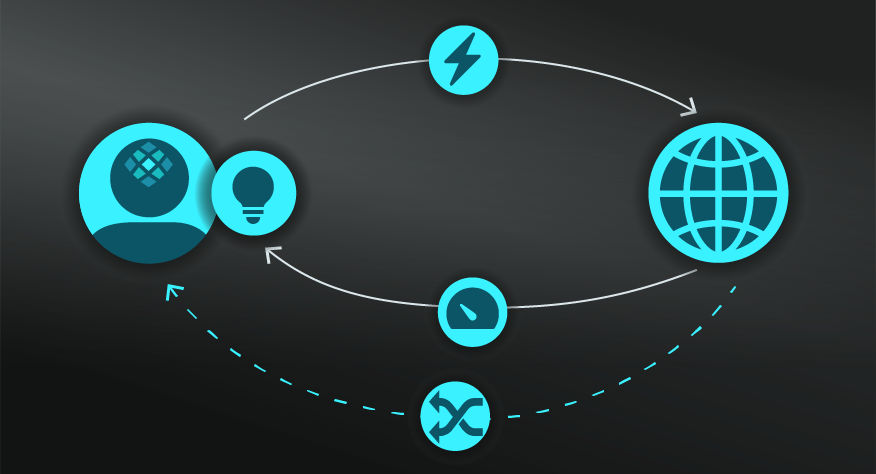

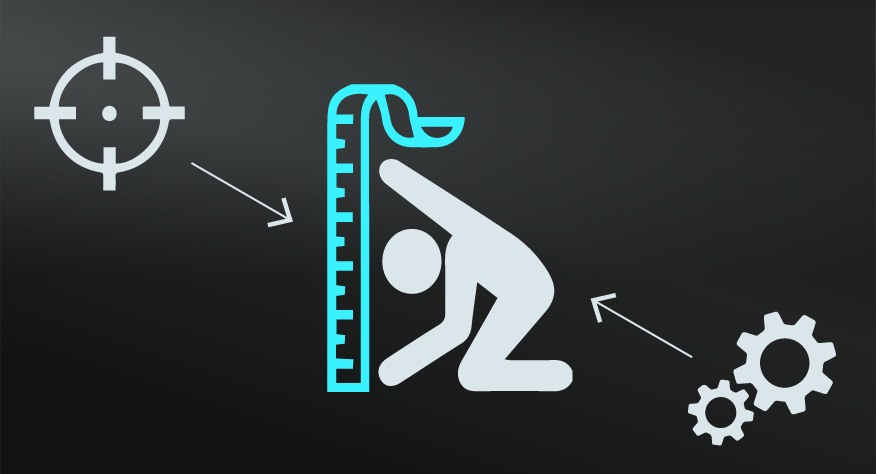

- Establish and test a hypothesis.

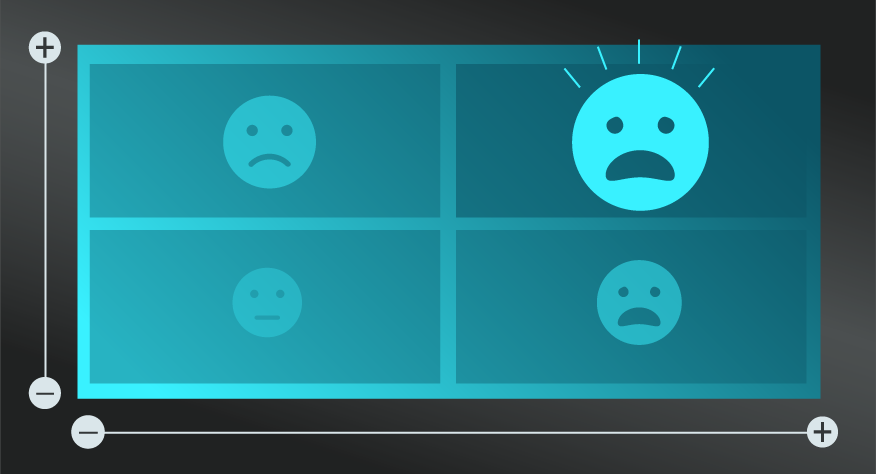

The point of split and A/B testing is to test a hypothesis. Your hypothesis should be clearly defined and might use a format such as PICOT, which stands for population (which target segment you will examine), intervention (what you are testing), comparison (what you are comparing it to), outcome (what you will measure), and time (when you will measure it).
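One way to keep a PICOT hypothesis explicit is to write it down as a small record before the test starts. The sketch below is illustrative only: the class name `PicotHypothesis`, the sentence template, and the example values are all assumptions, not part of any standard tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PicotHypothesis:
    """Hypothetical record holding the five PICOT elements of a test."""
    population: str    # which target segment you will examine
    intervention: str  # what you are testing
    comparison: str    # what you are comparing it to
    outcome: str       # what you will measure
    time: str          # when you will measure it

    def statement(self) -> str:
        # Assumed sentence template; adapt the wording to your own context.
        return (
            f"For {self.population}, {self.intervention} compared with "
            f"{self.comparison} will change {self.outcome} within {self.time}."
        )


# Made-up example values, for illustration only.
hypothesis = PicotHypothesis(
    population="first-time visitors",
    intervention="a one-page checkout",
    comparison="the current three-page checkout",
    outcome="the purchase completion rate",
    time="four weeks",
)
print(hypothesis.statement())
```

Freezing the record is a deliberate choice here: the hypothesis should not quietly change once data starts to arrive.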

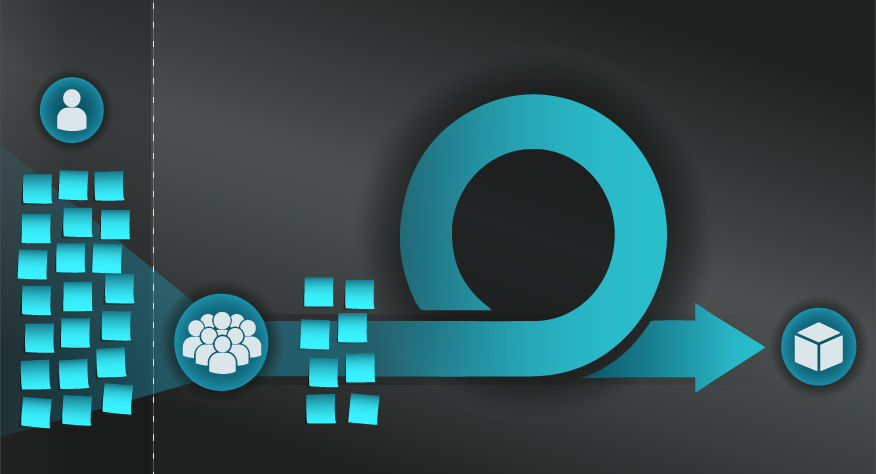

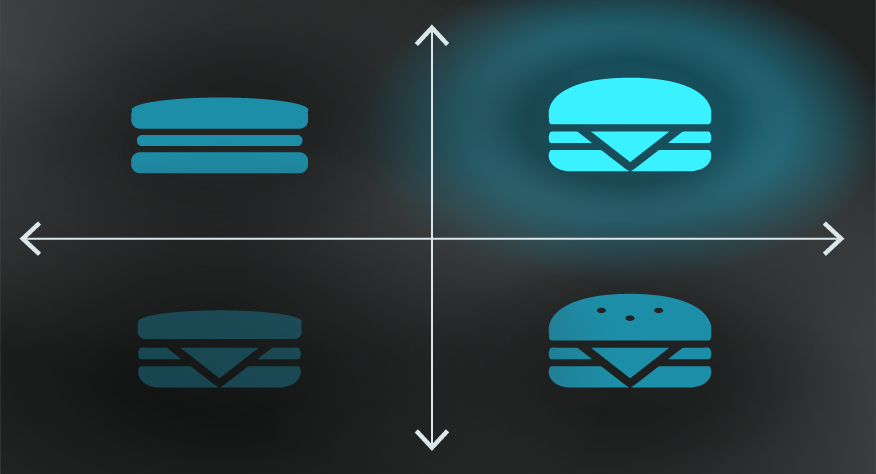

- Split Test before your A/B test.

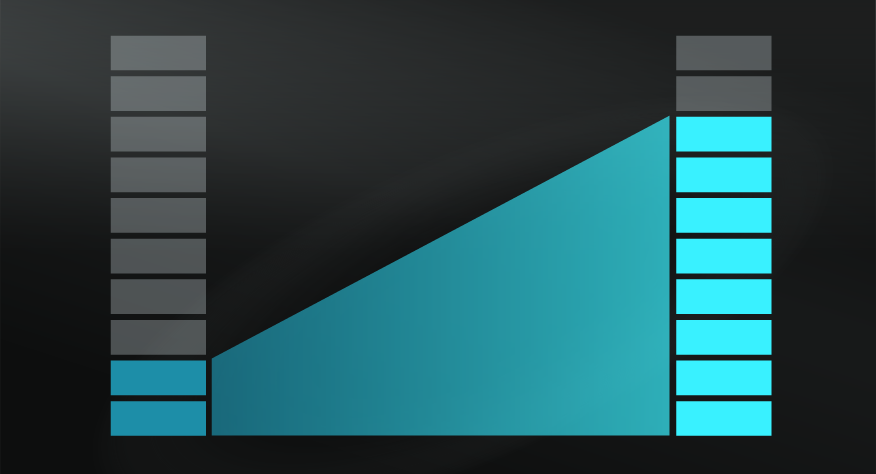

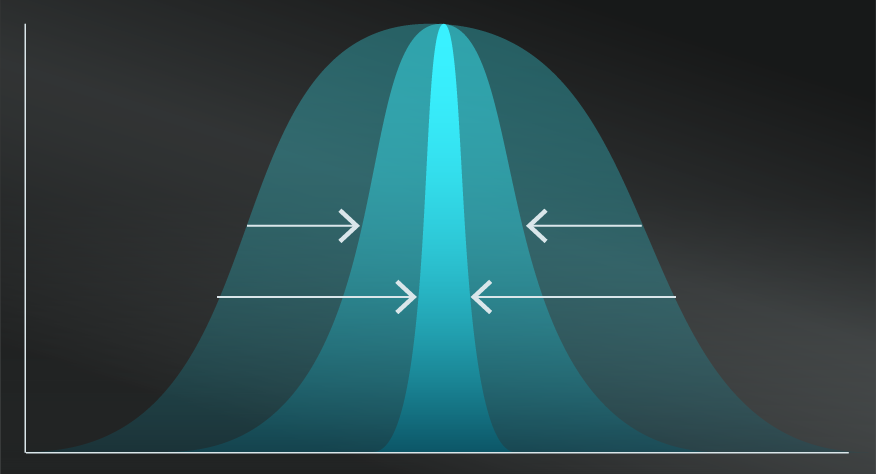

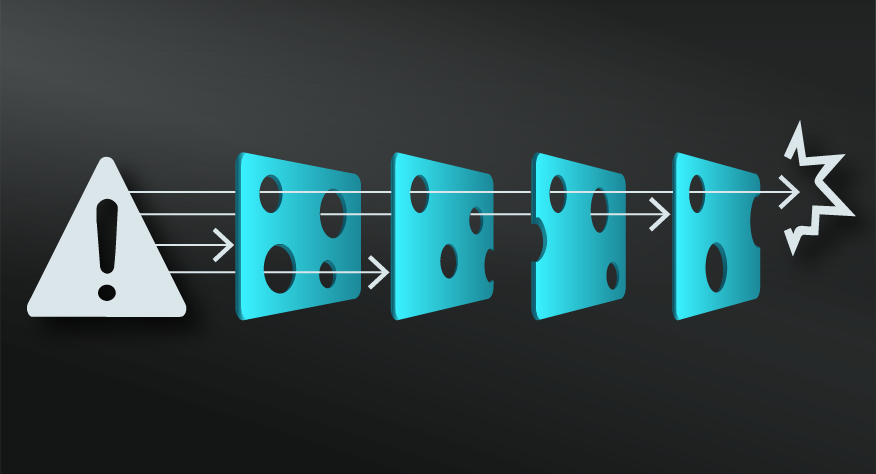

Split Testing is about testing two distinct approaches and should be run first to set direction. Once that direction is established, use A/B Testing to dig deeper into specific variables. With A/B Testing, pick one variable to test at a time. Multivariate testing considers multiple variables at once and is also a legitimate approach when the sample size is large enough to support every combination being tested.

- Ensure consistent sample groups.

Ensure that your sample groups are selected randomly and are large enough to produce statistically significant results, so that they demonstrate real trends and are not skewed by a few opinionated individuals.
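As a rough sketch of both requirements, the Python below randomly assigns users to two groups and estimates how many users each group needs. It assumes the outcome is a conversion rate and uses the standard normal-approximation formula for comparing two proportions. The function names, the default 5% significance level and 80% power, and the example rates are assumptions chosen for illustration.

```python
import math
import random
from statistics import NormalDist


def assign_groups(user_ids, seed=42):
    """Shuffle the users and split them into two groups, A and B."""
    rng = random.Random(seed)  # fixed seed so the split can be reproduced
    shuffled = list(user_ids)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return {"A": shuffled[:half], "B": shuffled[half:]}


def sample_size_per_group(p_baseline, p_expected, alpha=0.05, power=0.8):
    """Approximate users needed per group to detect the given change in rate."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided significance
    z_beta = NormalDist().inv_cdf(power)
    variance = p_baseline * (1 - p_baseline) + p_expected * (1 - p_expected)
    effect = p_expected - p_baseline
    return math.ceil((z_alpha + z_beta) ** 2 * variance / effect ** 2)


groups = assign_groups(range(100))
needed_small_lift = sample_size_per_group(0.10, 0.12)  # 10% -> 12%
needed_large_lift = sample_size_per_group(0.10, 0.20)  # 10% -> 20%
print(len(groups["A"]), len(groups["B"]))
print(needed_small_lift, needed_large_lift)
```

The smaller the difference you hope to detect, the more users each group needs, which is why subtle A/B changes often require far larger samples than a bold split test.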

- Remember, always be testing.

That’s the other name for A/B Testing: always be testing. It’s a reminder to continue to iterate, test and gather more data.

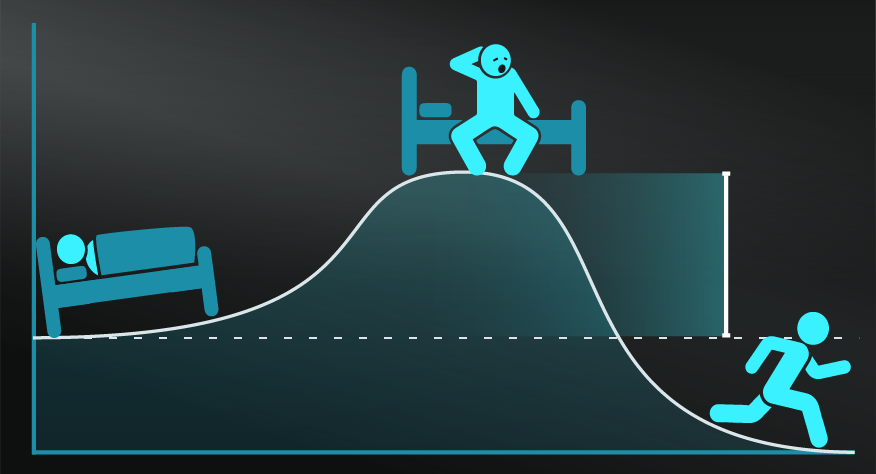

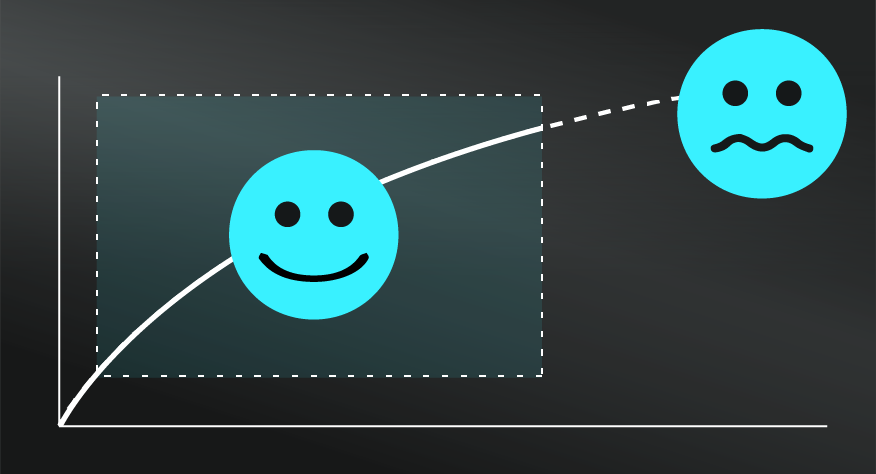

A common challenge with split and A/B Testing lies in jumping to early conclusions and not retesting. Software-supported testing often provides real-time visual feedback to managers, who might be tempted to view early trends as finished results even though they have low statistical significance. Similarly, once a pattern is established, many organisations do not invest in retesting.
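To illustrate why an early trend is not a finished result, the sketch below runs a two-proportion z-test on the same apparent lift at two sample sizes. The function name and the conversion counts are made up for illustration, and the test assumes samples large enough for the normal approximation to hold.

```python
import math
from statistics import NormalDist


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Return (z, two-sided p-value) for the difference in conversion rates."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("No variation in outcomes; the test is undefined.")
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Same observed rates (10% vs 15%), very different amounts of data.
z_early, p_early = two_proportion_z_test(10, 100, 15, 100)
z_late, p_late = two_proportion_z_test(1000, 10000, 1500, 10000)

print(f"early: z={z_early:.2f}, p={p_early:.3f}")
print(f"late:  z={z_late:.2f}, p={p_late:.3g}")
```

With 100 users per group the difference does not clear the conventional 0.05 threshold, while the identical rates at 10,000 users per group do. A dashboard showing only the two percentages would look the same in both cases.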

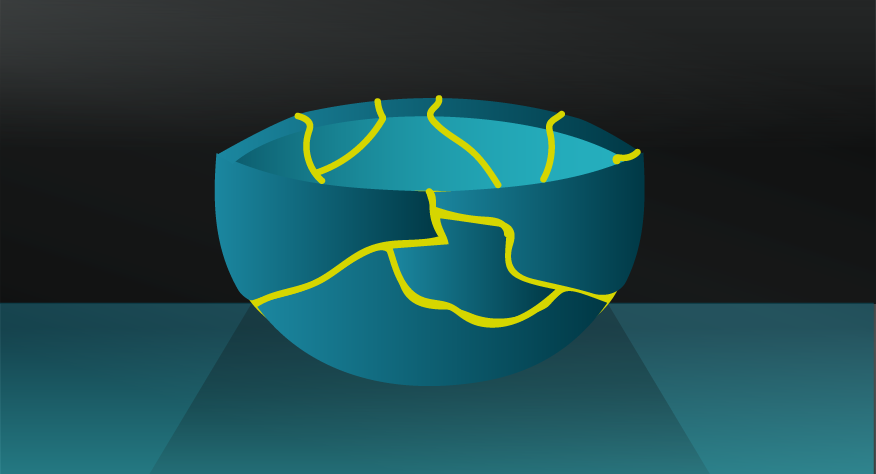

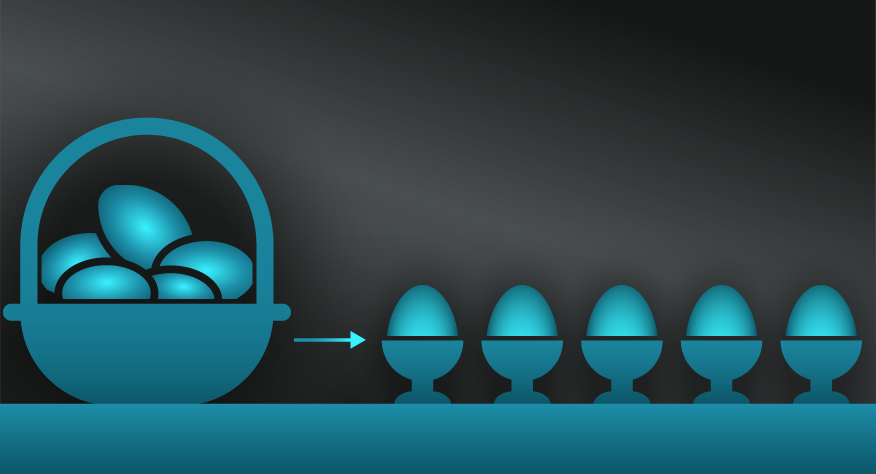

Fisher, tea and testing.

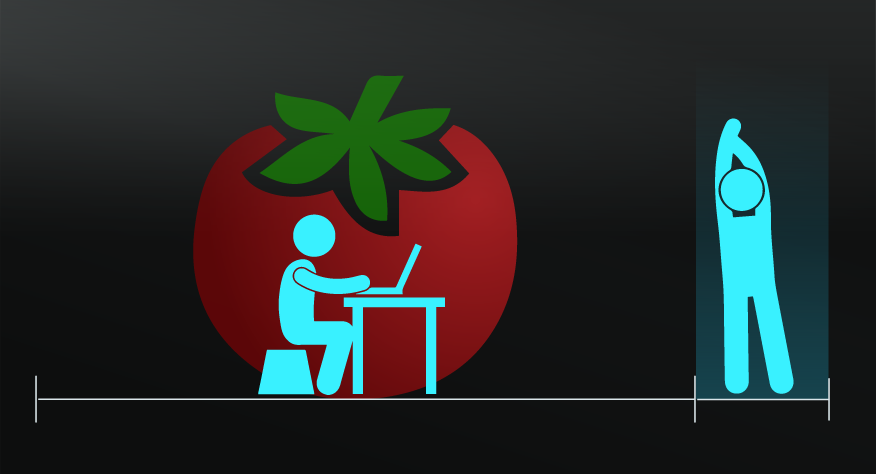

In 1920, biologist and statistician Ronald Fisher offered a colleague a cup of tea. She rejected it because he had added the milk before the tea. After an argument about whether the order would affect the taste, Fisher devised a test: pouring eight cups, four with tea first and four with milk first. To Fisher’s surprise, his colleague was able to identify the difference every time.
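The eight-cup design makes it easy to work out how unlikely a perfect score would be by luck alone. The short calculation below assumes the taster knows that exactly four cups are milk first and simply guesses which four.

```python
from math import comb

# Number of ways to choose which 4 of the 8 cups are "milk first".
total_ways = comb(8, 4)

# Only one of those selections is entirely correct, so a pure guesser
# gets every cup right with probability 1 / total_ways.
p_all_correct = 1 / total_ways

print(total_ways)               # 70
print(round(p_all_correct, 4))  # 0.0143
```

A chance of about 1 in 70 is small enough that a perfect result is hard to dismiss as a lucky guess, which is the same reasoning behind a significance threshold in a modern A/B test.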

Fisher went on to design a number of other experiments, including testing the impact of fertilizer on land, and wrote a book on the design of experiments. His significant contribution to scientific and statistical research is overshadowed only by his disappointing views on race and sex, which we won’t go into here.

Google.

In 2000 Google ran their first A/B test to establish the optimum number of results to display on a search engine result page. Apparently that test was thwarted by slow loading times.

However, Google has continued to use this approach, with estimates that it ran over 7,000 A/B tests in 2011 alone. Other tech companies have indicated that they run up to 100,000 experiments each year.

Split and A/B testing are aligned with the scientific method and data science. They can be applied in a range of domains and are particularly popular in marketing, UX and behavioural science.

Use the following examples of connected and complementary models to weave split and A/B testing into your broader latticework of mental models. Alternatively, discover your own connections by exploring the category list above.

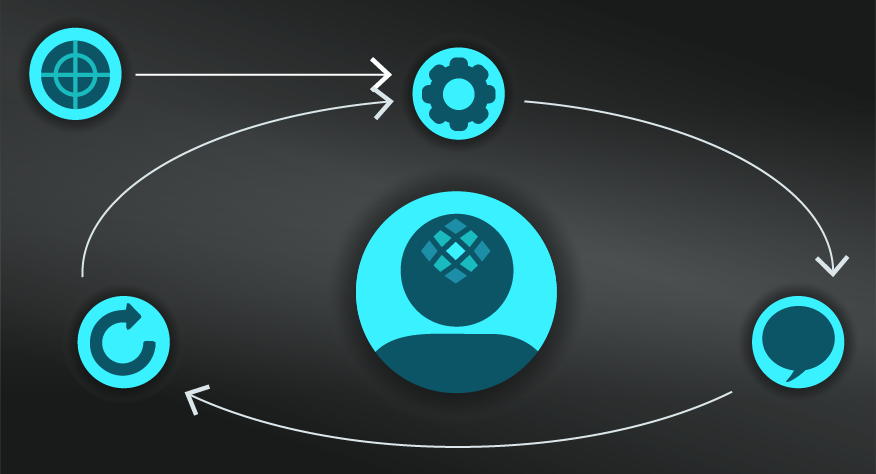

Connected models:

- Scientific method: essentially the same process.

- Benchmarking: in this case, benchmarking against a control rather than a best practice example.

- Lean startup: recommends split testing for real customer feedback rather than focus groups.

Complementary models:

- Bottleneck: identify areas of focus for A/B testing.

- Opportunity cost: such testing can help to put a figure against the opportunity cost related to design options.

Ronald Fisher, whose tea experiment is described in the In Practice section, was an early proponent of split and A/B testing, though the approach is thought to predate him considerably. Claude Hopkins is acknowledged as an early adopter in the field of marketing, running experiments with promotional coupons in the 1920s.
