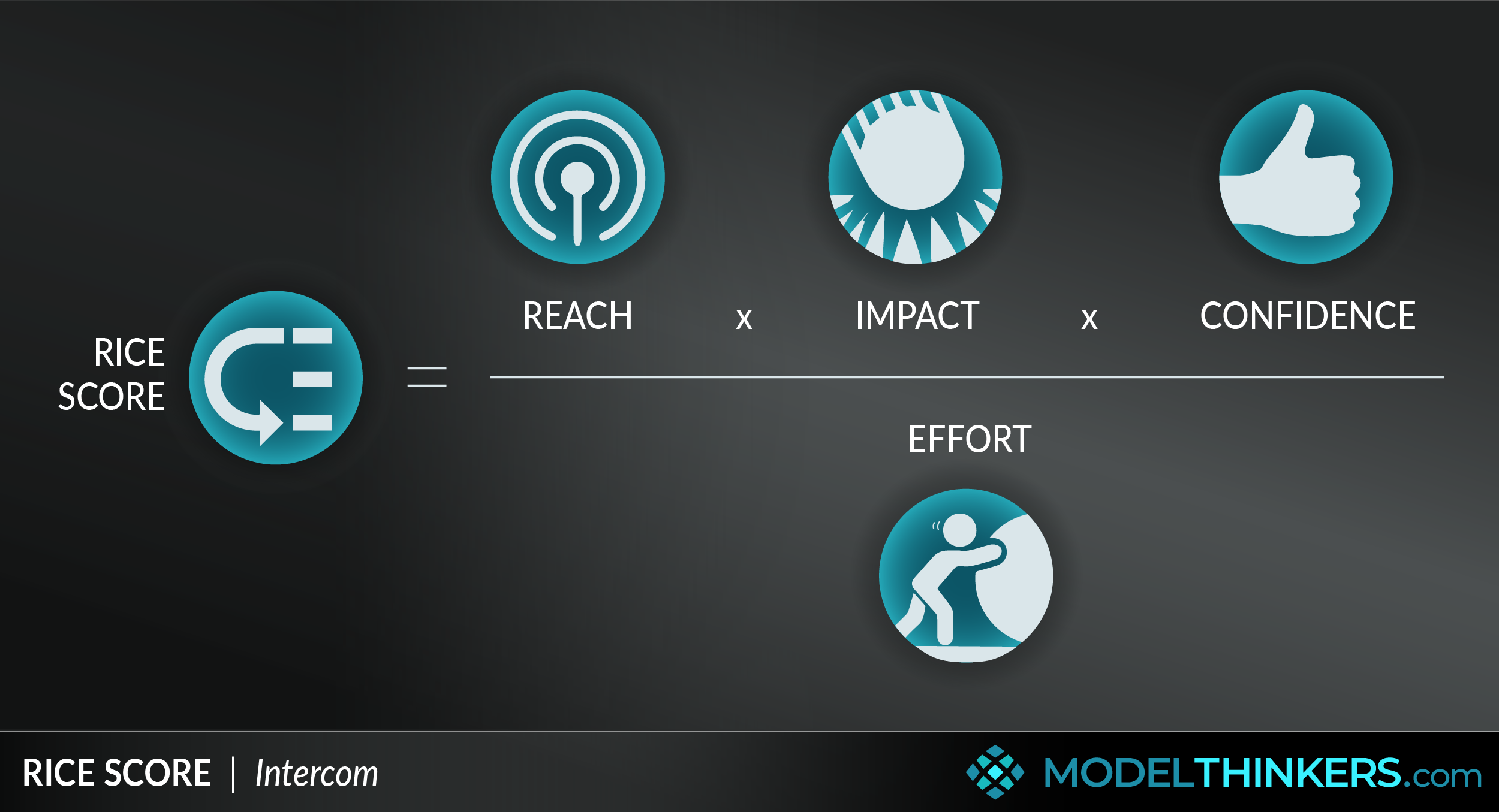

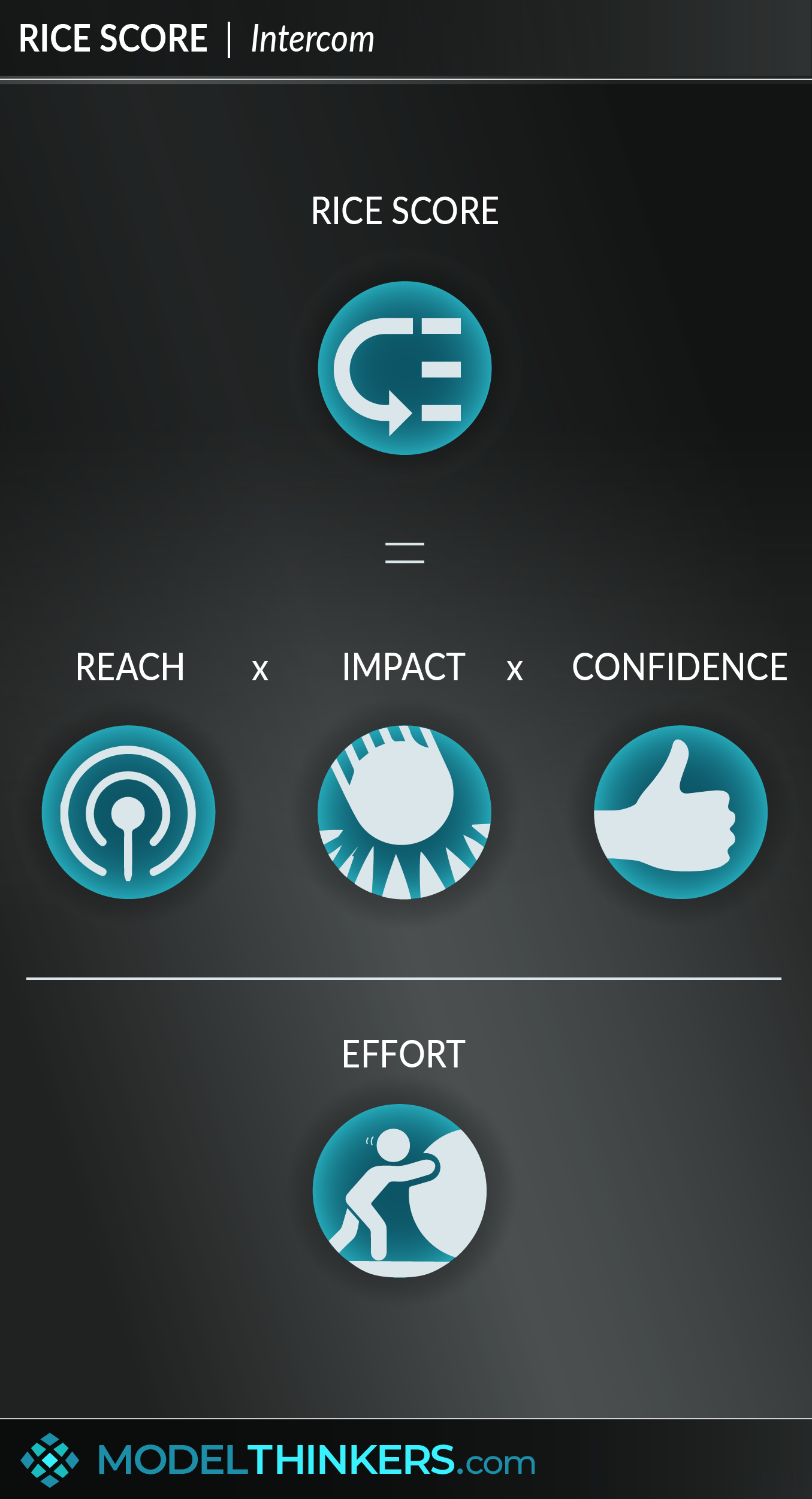

How do you choose which products or features to ship and which should remain in your backlog? RICE scores (Reach, Impact, Confidence, Effort) are one way of making that call.
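As a minimal sketch, the snippet below assumes the commonly cited RICE formula, score = (Reach × Impact × Confidence) ÷ Effort, with impact on a 0.25 to 3 scale, confidence as a fraction, and effort in person-months. The `Feature` class, the `prioritize` helper, and the backlog items and numbers are all hypothetical, chosen only to illustrate the arithmetic.

```python
from dataclasses import dataclass


@dataclass
class Feature:
    """A backlog item with hypothetical RICE inputs."""
    name: str
    reach: float       # people or events affected per period (e.g. users per quarter)
    impact: float      # assumed scale: 3 = massive, 2 = high, 1 = medium, 0.5 = low, 0.25 = minimal
    confidence: float  # confidence as a fraction, e.g. 0.8 for 80%
    effort: float      # assumed unit: person-months; must be positive

    def rice_score(self) -> float:
        """Reach x Impact x Confidence, divided by Effort."""
        if self.effort <= 0:
            raise ValueError("effort must be positive")
        return self.reach * self.impact * self.confidence / self.effort


def prioritize(features: list[Feature]) -> list[Feature]:
    """Return features sorted from highest to lowest RICE score."""
    return sorted(features, key=lambda f: f.rice_score(), reverse=True)


if __name__ == "__main__":
    # Hypothetical backlog; numbers are illustrative only.
    backlog = [
        Feature("Onboarding checklist", reach=2000, impact=1, confidence=0.8, effort=2),
        Feature("Dark mode", reach=5000, impact=0.5, confidence=1.0, effort=4),
        Feature("Bulk export", reach=300, impact=2, confidence=0.5, effort=1),
    ]
    for f in prioritize(backlog):
        print(f"{f.name}: {f.rice_score():.1f}")
```

Running it prints the onboarding checklist first (800.0), then dark mode (625.0), then bulk export (300.0). Because effort sits in the denominator, a cheap item with modest reach can outrank a larger, costlier one, so the score is best treated as one input to the decision rather than the final word.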


Aliquam in felis sit amet augue laoreet fringilla. Suspendisse potenti. Sed in libero ut nibh placerat accumsan. Proin ac libero euismod, tincidunt nisi a, tincidunt nunc. Sed sit amet ipsum non quam tincidunt tincidunt. Nulla facilisi. Donec vel libero nec justo tincidunt tincidunt. Sed ut perspiciatis unde omnis iste natus error sit voluptatem accusantium doloremque laudantium, totam rem aperiam, eaque ipsa quae ab illo inventore veritatis et quasi architecto beatae vitae dicta sunt explicabo.

Curabitur pretium tincidunt lacus. Nulla gravida orci a odio, et viverra justo commodo id. Aliquam in felis sit amet augue laoreet fringilla. Suspendisse potenti. Sed in libero ut nibh placerat accumsan. Proin ac libero euismod, tincidunt nisi a, tincidunt nunc. Sed sit amet ipsum non quam tincidunt tincidunt. Nulla facilisi. Donec vel libero nec justo tincidunt tincidunt. Sed ut perspiciatis unde omnis iste natus error sit voluptatem accusantium doloremque laudantium, totam rem aperiam, eaque ipsa quae ab illo inventore veritatis et quasi architecto beatae vitae dicta sunt explicabo.

Integer in libero ut justo cursus tincidunt. Sed vitae libero sit amet dolor tincidunt tincidunt. Aenean euismod, nisi vel consectetur interdum, nisl nisi cursus nisi, vitae tincidunt nisi nisl eget nisi. Sed ut perspiciatis unde omnis iste natus error sit voluptatem accusantium doloremque laudantium, totam rem aperiam, eaque ipsa quae ab illo inventore veritatis et quasi architecto beatae vitae dicta sunt explicabo. Vivamus lacinia odio vitae vestibulum. Nulla facilisi. Sed ut perspiciatis unde omnis iste natus error sit voluptatem accusantium doloremque laudantium, totam rem aperiam, eaque ipsa quae ab illo inventore veritatis et quasi architecto beatae vitae dicta sunt explicabo.

Nam sit amet erat euismod, tincidunt nisi a, tincidunt nunc. Sed sit amet ipsum non quam tincidunt tincidunt. Nulla facilisi. Donec vel libero nec justo tincidunt tincidunt. Sed ut perspiciatis unde omnis iste natus error sit voluptatem accusantium doloremque laudantium, totam rem aperiam, eaque ipsa quae ab illo inventore veritatis et quasi architecto beatae vitae dicta sunt explicabo. Integer in libero ut justo cursus tincidunt. Sed vitae libero sit amet dolor tincidunt tincidunt.

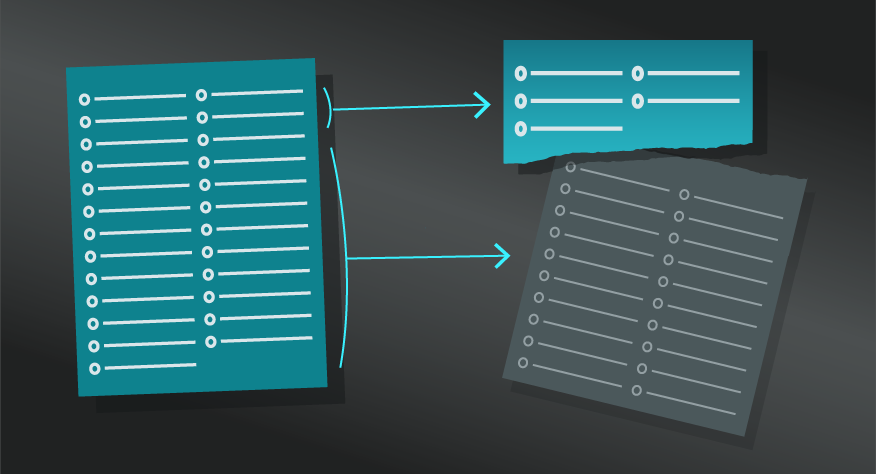

- Identify the product or feature under consideration.

This might be part of a backlog or from a bra ...
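Once a candidate is identified, it helps to record it in a form that can later be scored and compared. The sketch below is a minimal illustration. It assumes the commonly cited RICE formulation, score = (Reach × Impact × Confidence) / Effort, with Impact on a 0.25 to 3 scale, Confidence as a fraction, and Effort in person-months. The `BacklogItem` class, `rank_backlog` helper, and the two example features and their numbers are hypothetical and exist only to show the arithmetic.

```python
from dataclasses import dataclass


@dataclass
class BacklogItem:
    """A candidate product or feature pulled from the backlog (hypothetical fields)."""
    name: str
    reach: float       # people or events affected per time period, e.g. users per quarter
    impact: float      # assumed scale: 3 massive, 2 high, 1 medium, 0.5 low, 0.25 minimal
    confidence: float  # fraction in [0, 1], e.g. 0.8 for 80%
    effort: float      # person-months; must be positive

    def rice_score(self) -> float:
        """Compute (Reach x Impact x Confidence) / Effort."""
        if self.effort <= 0:
            raise ValueError("effort must be positive")
        return self.reach * self.impact * self.confidence / self.effort


def rank_backlog(items):
    """Return backlog items sorted from highest to lowest RICE score."""
    return sorted(items, key=lambda item: item.rice_score(), reverse=True)


if __name__ == "__main__":
    # Hypothetical backlog entries, not real data.
    backlog = [
        BacklogItem("Dark mode", reach=2000, impact=1, confidence=0.8, effort=2),
        BacklogItem("CSV export", reach=500, impact=2, confidence=1.0, effort=1),
    ]
    for item in rank_backlog(backlog):
        print(f"{item.name}: {item.rice_score():.0f}")
```

Here the smaller-reach "CSV export" (500 × 2 × 1.0 / 1 = 1000) outranks "Dark mode" (2000 × 1 × 0.8 / 2 = 800), because dividing by Effort rewards cheaper work. The scales themselves are a team convention, so the absolute numbers matter less than scoring every item consistently.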


Curabitur pretium tincidunt lacus. Nulla gravida orci a odio, et viverra justo commodo id. Aliquam in felis sit amet augue laoreet fringilla. Suspendisse potenti. Sed in libero ut nibh placerat accumsan. Proin ac libero euismod, tincidunt nisi a, tincidunt nunc. Sed sit amet ipsum non quam tincidunt tincidunt. Nulla facilisi. Donec vel libero nec justo tincidunt tincidunt. Sed ut perspiciatis unde omnis iste natus error sit voluptatem accusantium doloremque laudantium, totam rem aperiam, eaque ipsa quae ab illo inventore veritatis et quasi architecto beatae vitae dicta sunt explicabo.

Integer in libero ut justo cursus tincidunt. Sed vitae libero sit amet dolor tincidunt tincidunt. Aenean euismod, nisi vel consectetur interdum, nisl nisi cursus nisi, vitae tincidunt nisi nisl eget nisi. Sed ut perspiciatis unde omnis iste natus error sit voluptatem accusantium doloremque laudantium, totam rem aperiam, eaque ipsa quae ab illo inventore veritatis et quasi architecto beatae vitae dicta sunt explicabo. Vivamus lacinia odio vitae vestibulum. Nulla facilisi. Sed ut perspiciatis unde omnis iste natus error sit voluptatem accusantium doloremque laudantium, totam rem aperiam, eaque ipsa quae ab illo inventore veritatis et quasi architecto beatae vitae dicta sunt explicabo.

Nam sit amet erat euismod, tincidunt nisi a, tincidunt nunc. Sed sit amet ipsum non quam tincidunt tincidunt. Nulla facilisi. Donec vel libero nec justo tincidunt tincidunt. Sed ut perspiciatis unde omnis iste natus error sit voluptatem accusantium doloremque laudantium, totam rem aperiam, eaque ipsa quae ab illo inventore veritatis et quasi architecto beatae vitae dicta sunt explicabo. Integer in libero ut justo cursus tincidunt. Sed vitae libero sit amet dolor tincidunt tincidunt.

Aliquam in felis sit amet augue laoreet fringilla. Suspendisse potenti. Sed in libero ut nibh placerat accumsan. Proin ac libero euismod, tincidunt nisi a, tincidunt nunc. Sed sit amet ipsum non quam tincidunt tincidunt. Nulla facilisi. Donec vel libero nec justo tincidunt tincidunt. Sed ut perspiciatis unde omnis iste natus error sit voluptatem accusantium doloremque laudantium, totam rem aperiam, eaque ipsa quae ab illo inventore veritatis et quasi architecto beatae vitae dicta sunt explicabo.

Curabitur pretium tincidunt lacus. Nulla gravida orci a odio, et viverra justo commodo id. Aliquam in felis sit amet augue laoreet fringilla. Suspendisse potenti. Sed in libero ut nibh placerat accumsan. Proin ac libero euismod, tincidunt nisi a, tincidunt nunc. Sed sit amet ipsum non quam tincidunt tincidunt. Nulla facilisi. Donec vel libero nec justo tincidunt tincidunt. Sed ut perspiciatis unde omnis iste natus error sit voluptatem accusantium doloremque laudantium, totam rem aperiam, eaque ipsa quae ab illo inventore veritatis et quasi architecto beatae vitae dicta sunt explicabo.

Integer in libero ut justo cursus tincidunt. Sed vitae libero sit amet dolor tincidunt tincidunt. Aenean euismod, nisi vel consectetur interdum, nisl nisi cursus nisi, vitae tincidunt nisi nisl eget nisi. Sed ut perspiciatis unde omnis iste natus error sit voluptatem accusantium doloremque laudantium, totam rem aperiam, eaque ipsa quae ab illo inventore veritatis et quasi architecto beatae vitae dicta sunt explicabo. Vivamus lacinia odio vitae vestibulum. Nulla facilisi. Sed ut perspiciatis unde omnis iste natus error sit voluptatem accusantium doloremque laudantium, totam rem aperiam, eaque ipsa quae ab illo inventore veritatis et quasi architecto beatae vitae dicta sunt explicabo.

Nam sit amet erat euismod, tincidunt nisi a, tincidunt nunc. Sed sit amet ipsum non quam tincidunt tincidunt. Nulla facilisi. Donec vel libero nec justo tincidunt tincidunt. Sed ut perspiciatis unde omnis iste natus error sit voluptatem accusantium doloremque laudantium, totam rem aperiam, eaque ipsa quae ab illo inventore veritatis et quasi architecto beatae vitae dicta sunt explicabo. Integer in libero ut justo cursus tincidunt. Sed vitae libero sit amet dolor tincidunt tincidunt.

Aliquam in felis sit amet augue laoreet fringilla. Suspendisse potenti. Sed in libero ut nibh placerat accumsan. Proin ac libero euismod, tincidunt nisi a, tincidunt nunc. Sed sit amet ipsum non quam tincidunt tincidunt. Nulla facilisi. Donec vel libero nec justo tincidunt tincidunt. Sed ut perspiciatis unde omnis iste natus error sit voluptatem accusantium doloremque laudantium, totam rem aperiam, eaque ipsa quae ab illo inventore veritatis et quasi architecto beatae vitae dicta sunt explicabo.

Curabitur pretium tincidunt lacus. Nulla gravida orci a odio, et viverra justo commodo id. Aliquam in felis sit amet augue laoreet fringilla. Suspendisse potenti. Sed in libero ut nibh placerat accumsan. Proin ac libero euismod, tincidunt nisi a, tincidunt nunc. Sed sit amet ipsum non quam tincidunt tincidunt. Nulla facilisi. Donec vel libero nec justo tincidunt tincidunt. Sed ut perspiciatis unde omnis iste natus error sit voluptatem accusantium doloremque laudantium, totam rem aperiam, eaque ipsa quae ab illo inventore veritatis et quasi architecto beatae vitae dicta sunt explicabo.

Integer in libero ut justo cursus tincidunt. Sed vitae libero sit amet dolor tincidunt tincidunt. Aenean euismod, nisi vel consectetur interdum, nisl nisi cursus nisi, vitae tincidunt nisi nisl eget nisi. Sed ut perspiciatis unde omnis iste natus error sit voluptatem accusantium doloremque laudantium, totam rem aperiam, eaque ipsa quae ab illo inventore veritatis et quasi architecto beatae vitae dicta sunt explicabo. Vivamus lacinia odio vitae vestibulum. Nulla facilisi. Sed ut perspiciatis unde omnis iste natus error sit voluptatem accusantium doloremque laudantium, totam rem aperiam, eaque ipsa quae ab illo inventore veritatis et quasi architecto beatae vitae dicta sunt explicabo.

Nam sit amet erat euismod, tincidunt nisi a, tincidunt nunc. Sed sit amet ipsum non quam tincidunt tincidunt. Nulla facilisi. Donec vel libero nec justo tincidunt tincidunt. Sed ut perspiciatis unde omnis iste natus error sit voluptatem accusantium doloremque laudantium, totam rem aperiam, eaque ipsa quae ab illo inventore veritatis et quasi architecto beatae vitae dicta sunt explicabo. Integer in libero ut justo cursus tincidunt. Sed vitae libero sit amet dolor tincidunt tincidunt.

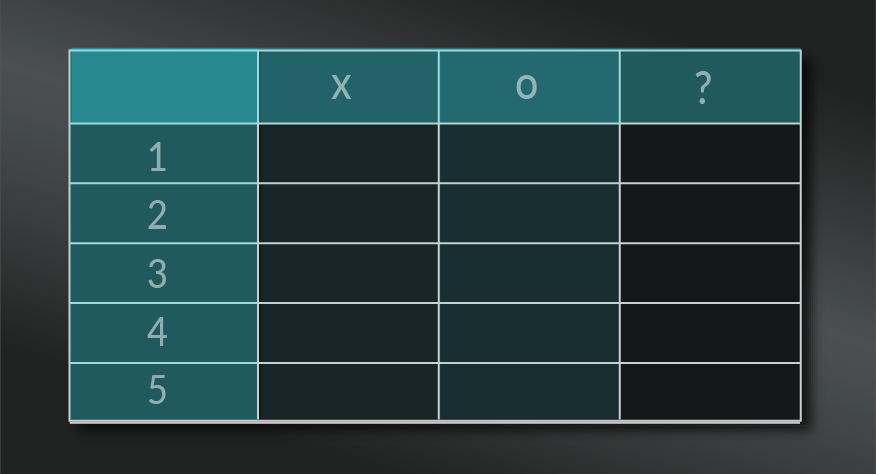

The inputs to RICE are still subjective estimates and can be influenced by biases, though the ‘confidence’ factor helps by making the uncertainty in those estimates explicit.

The ‘effort’ factor does not account for the differing cost of people’s time. For example, 1 week of a senior developer’s time, which might cost twice as much, counts the same as 1 week of a junior designer’s time.
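A minimal Python sketch of this gap, for illustration only. It assumes effort is measured in person-weeks (Intercom's original post uses person-months) and uses hypothetical relative costs rather than real salaries. The cost-weighted score is an illustrative adaptation, not part of the RICE method.

```python
# Sketch: RICE treats all person-time as equal, whoever does the work.
# The relative costs and the cost-weighted variant are hypothetical illustrations.

def rice_score(reach: float, impact: float, confidence: float,
               staffing: dict[str, float]) -> float:
    """Standard RICE: effort is total person-weeks, regardless of role."""
    effort_weeks = sum(staffing.values())
    return reach * impact * confidence / effort_weeks


def cost_weighted_score(reach: float, impact: float, confidence: float,
                        staffing: dict[str, float],
                        weekly_cost: dict[str, float]) -> float:
    """Hypothetical variant (not part of RICE): divide by staffing cost instead."""
    cost = sum(weeks * weekly_cost[role] for role, weeks in staffing.items())
    return reach * impact * confidence / cost


# Hypothetical relative costs: a senior developer week costs twice a junior designer week.
WEEKLY_COST = {"senior_developer": 2.0, "junior_designer": 1.0}

# Two features with identical reach, impact and confidence; each needs 1 person-week.
feature_a = {"senior_developer": 1.0}
feature_b = {"junior_designer": 1.0}

rice_a = rice_score(1000, 1.0, 0.8, feature_a)
rice_b = rice_score(1000, 1.0, 0.8, feature_b)
weighted_a = cost_weighted_score(1000, 1.0, 0.8, feature_a, WEEKLY_COST)
weighted_b = cost_weighted_score(1000, 1.0, 0.8, feature_b, WEEKLY_COST)

print(f"RICE:          A={rice_a:.0f}  B={rice_b:.0f}")          # same score
print(f"Cost-weighted: A={weighted_a:.0f}  B={weighted_b:.0f}")  # A scores half of B
```

Dividing by cost rather than time is only one possible adjustment, and it makes the score depend on how accurate the cost estimates are.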

Intercom.

The RICE score was first developed by Intercom as a way to set product priorities, and their original post included a link to an example spreadsheet for calculating RICE scores.
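The calculation behind such a spreadsheet can be sketched in a few lines of Python. This sketch assumes the formula and scales from Intercom's original post: RICE = (Reach × Impact × Confidence) / Effort, with impact on a 3 / 2 / 1 / 0.5 / 0.25 scale, confidence as a percentage (100% high, 80% medium, 50% low) and effort in person-months. The feature names and numbers are hypothetical.

```python
from dataclasses import dataclass

# Impact multipliers as described in Intercom's original RICE post.
IMPACT_SCALE = {"massive": 3.0, "high": 2.0, "medium": 1.0, "low": 0.5, "minimal": 0.25}


@dataclass
class Feature:
    name: str
    reach: float       # people or events per time period, e.g. customers per quarter
    impact: str        # one of the keys in IMPACT_SCALE
    confidence: float  # as a fraction: 1.0 = high, 0.8 = medium, 0.5 = low
    effort: float      # person-months

    def rice(self) -> float:
        """RICE = (Reach * Impact * Confidence) / Effort."""
        if self.effort <= 0:
            raise ValueError("effort must be positive")
        return self.reach * IMPACT_SCALE[self.impact] * self.confidence / self.effort


def prioritise(backlog: list[Feature]) -> list[tuple[str, float]]:
    """Score every feature and sort from highest to lowest RICE score."""
    scored = [(feature.name, feature.rice()) for feature in backlog]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


# Hypothetical backlog, for illustration only.
backlog = [
    Feature("Onboarding checklist", reach=500, impact="high", confidence=0.8, effort=2),
    Feature("Dark mode", reach=2000, impact="low", confidence=1.0, effort=3),
    Feature("CSV export", reach=300, impact="medium", confidence=0.5, effort=0.5),
]

ranked = prioritise(backlog)
for name, score in ranked:
    print(f"{name:<22}{score:8.1f}")
```

With these made-up inputs the ranking is the onboarding checklist (400), dark mode (about 333) and CSV export (300). Dark mode reaches four times as many people as the checklist, but its low impact and higher effort pull it below.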

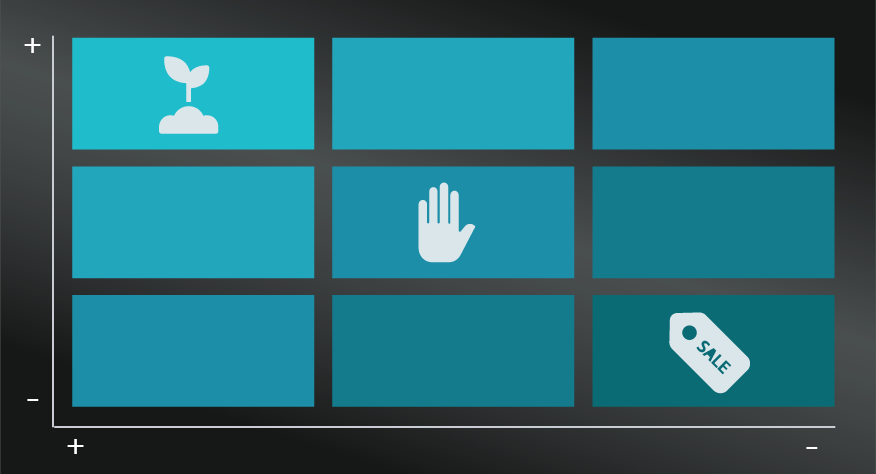

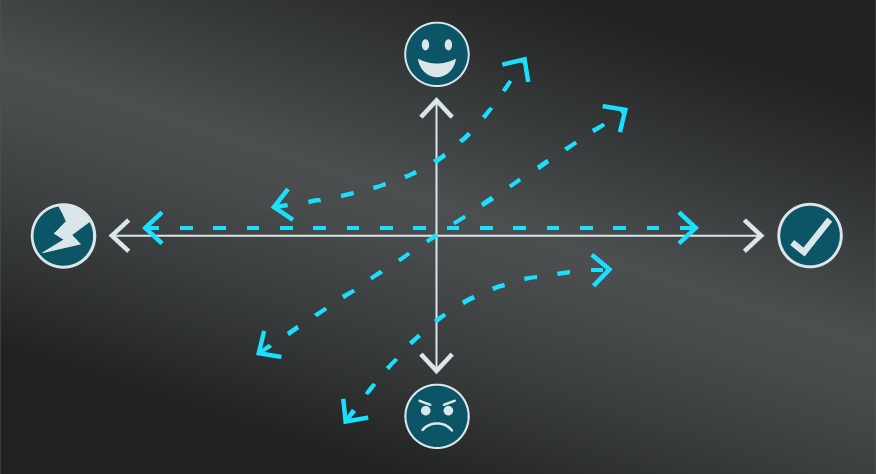

RICE scores are typically used by product managers to help prioritise products and product features, similar to the impact effort matrix or kano.

Use the following examples of connected and complementary models to weave the RICE score into your broader latticework of mental models. Alternatively, discover your own connections by exploring the category list above.

Connected models:

- Impact effort matrix and kano are alternative prioritisation methods.

- Pareto principle: to help identify the ‘20’ features that deliver the ‘80’ value.

Complementary models:

- Golden circle: to ensure that new products and features are aligned with a deeper direction.

- Lock-in effect: as a potential consideration in relation to impact.

- Personas: as a potential tool to explore and establish reach and impact.

- Zawinski’s law: a warning to prioritise and avoid product bloat.

- Agile methodology: an iterative approach that typically requires fast, ongoing prioritisation, which this form of scoring supports well.

- Minimum viable product: as an approach to cut out unnecessary features in the first instance.

The RICE scoring model was developed by Intercom and is outlined in some detail in their original post about it.
